EmotionVision: AI-Driven Emotion Recognition in Facial Images

Introduction

The EmotionVision project explores how to teach machines to recognize human emotions from facial images. It classifies each face into one of seven emotions: Angry, Disgust, Fear, Happy, Sad, Surprise, and Neutral. Potential applications include customer behavior analysis, security systems, and video games.

The project explores two distinct approaches to emotion detection:

- Training a Convolutional Neural Network (CNN) from scratch using labeled facial emotion datasets.

- Utilizing a pre-trained model via the DeepFace library.

Both methods are evaluated for performance, scalability, and practicality.

Step 1: Data Collection and Preparation

To build a robust emotion detection system, the first critical step is obtaining a reliable dataset. For this project, we used FER2013, a publicly available dataset of about 35,900 grayscale facial images at 48×48 pixels, each labeled with one of the seven emotions. The images are organized into separate training and testing directories, which simplifies preprocessing.
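The write-up does not show how the training and testing directories are read. A minimal sketch, assuming the common image-folder release of FER2013 with one subdirectory per emotion (for example `train/happy/` or `test/sad/`); the folder names, the `EMOTIONS` order, and the `index_split` helper are assumptions, not the project's code:

```python
from pathlib import Path

# Assumed label order (matches FER2013's 0-6 encoding) and assumed folder names.
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def index_split(split_dir):
    """Return parallel lists (paths, labels) for images under split_dir/<emotion>/."""
    root = Path(split_dir)
    paths, labels = [], []
    for label, emotion in enumerate(EMOTIONS):
        class_dir = root / emotion
        if not class_dir.is_dir():
            continue  # tolerate a missing class folder
        for img_path in sorted(class_dir.iterdir()):
            if img_path.suffix.lower() in IMAGE_SUFFIXES:  # skip non-image files
                paths.append(img_path)
                labels.append(label)
    return paths, labels
```

For example, `paths, labels = index_split("train")` yields lists that any image loader can consume. In Keras, `tf.keras.utils.image_dataset_from_directory` reads the same layout directly, but it orders classes alphabetically unless `class_names` is passed, so the integer labels may differ from the order above.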

Data Preprocessing:

- Normalization: Each pixel value is scaled to the range [0,1] for consistent model input.

- Label Encoding: Emotion categories are converted into numerical representations.

- Data Augmentation: Techniques such as rotation, flipping, zooming, and brightness adjustment were applied to enrich the training data and reduce overfitting. This helps the model generalize to varied face orientations and lighting conditions.
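The three preprocessing steps can be sketched with NumPy. This is an illustration, not the project's code: the brightness range is a hypothetical choice, only horizontal flips are used (vertical flips would produce unnatural faces), and rotation and zoom are left to Keras preprocessing layers such as `RandomRotation` and `RandomZoom`.

```python
import numpy as np

NUM_CLASSES = 7  # Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral


def normalize(images):
    """Scale uint8 pixels in [0, 255] to float32 values in [0, 1]."""
    return images.astype(np.float32) / 255.0


def one_hot(labels, num_classes=NUM_CLASSES):
    """Turn integer labels (0..num_classes-1) into one-hot rows."""
    return np.eye(num_classes, dtype=np.float32)[labels]


def augment(image, rng):
    """Randomly mirror one normalized image left-right and jitter its brightness."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1]  # horizontal flip: reverse the column order
    factor = rng.uniform(0.8, 1.2)  # hypothetical brightness range
    return np.clip(out * factor, 0.0, 1.0)  # keep pixels inside [0, 1]


# Example: preprocess a dummy batch of four 48x48 grayscale images.
rng = np.random.default_rng(0)
raw = rng.integers(0, 256, size=(4, 48, 48), dtype=np.uint8)
x = normalize(raw)
y = one_hot(np.array([3, 4, 0, 6]))  # Happy, Sad, Angry, Neutral
x_aug = np.stack([augment(img, rng) for img in x])
```

Augmentation is applied only to training images; test images are normalized but left otherwise untouched so that evaluation reflects real inputs.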

Step 2: Approach 1 – Building a CNN Model

The first approach involved constructing a CNN using TensorFlow's Keras API. CNNs suit image tasks because stacked convolutional layers learn a hierarchy of spatial features, from simple edges in early layers to more complex patterns in deeper ones. Here's how we designed the model:

Model Architecture:

- Convolutional Layers: Extract key features like edges, textures, and patterns from images.

- Pooling Layers: Reduce the spatial dimensions while retaining crucial information.

- Dense Layers: Combine extracted features for decision-making.

- Activation Functions: ReLU was used in intermediate layers, while Softmax provided probabilities for each emotion class.

- Dropout Layers: Added to reduce overfitting by randomly zeroing a fraction of activations during training.
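The Keras model itself is not reproduced in this write-up. As a hedged illustration of what each layer type in the list computes, here is a NumPy sketch that pushes one 48×48 image through a convolution, ReLU, pooling, dropout, a dense layer, and softmax. In Keras these roles are played by `Conv2D`, `MaxPooling2D`, `Dropout`, and `Dense` layers; the kernel and weights below are hand-picked or random, not learned.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Single-channel 'valid' convolution (cross-correlation, as CNN layers compute)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w), dtype=np.float32)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    """ReLU: keep positive activations, zero out negatives."""
    return np.maximum(x, 0.0)


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 (halves height and width)."""
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    blocks = fmap[:h, :w].reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))


def dropout(x, rate, rng, training=True):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def softmax(logits):
    """Turn raw class scores into probabilities that sum to 1."""
    e = np.exp(logits - np.max(logits))  # shift by the max for numerical stability
    return e / e.sum()


# Forward pass of one 48x48 grayscale face through a tiny, untrained network.
rng = np.random.default_rng(0)
image = rng.random((48, 48), dtype=np.float32)
vertical_edges = np.array([[-1, 0, 1]] * 3, dtype=np.float32)  # hand-picked kernel
fmap = max_pool2x2(relu(conv2d_valid(image, vertical_edges)))  # 46x46 -> 23x23
features = dropout(fmap.ravel(), rate=0.5, rng=rng)            # 529 values
dense_weights = rng.normal(0.0, 0.01, size=(features.size, 7))  # random, not learned
probs = softmax(features @ dense_weights)                       # 7 class probabilities
```

A real model stacks several such convolution blocks with many learned kernels each; the shapes here simply show how a 3×3 convolution trims the border and pooling halves the spatial size.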

Training:

- Input Shape: Images were loaded at 48×48 pixels, FER2013's native resolution, which also keeps computation low.

- Metrics: Accuracy and loss were monitored across training and validation datasets.

- Tools: Confusion matrices and classification reports were used to analyze model performance.
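The write-up does not say which library produced the confusion matrices and classification reports; scikit-learn's `confusion_matrix` and `classification_report` are common choices. To make those numbers concrete, here is a from-scratch NumPy sketch, assuming integer labels in the order Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral (an assumed encoding):

```python
import numpy as np

EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def confusion_matrix(y_true, y_pred, num_classes=len(EMOTIONS)):
    """cm[i, j] counts samples whose true class is i and predicted class is j."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def accuracy(cm):
    """Fraction of samples on the diagonal (correct predictions)."""
    return np.trace(cm) / cm.sum()


def per_class_report(cm):
    """Per-class precision, recall and F1; 0.0 wherever a denominator is zero."""
    tp = np.diag(cm).astype(np.float64)
    predicted = cm.sum(axis=0)  # column sums: how often each class was predicted
    actual = cm.sum(axis=1)     # row sums: true support of each class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    return precision, recall, f1
```

Rows are true classes and columns are predictions, so a large `cm[2, 5]` would mean many Fear faces predicted as Surprise.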

Results:

After training for multiple epochs, the model predicted emotions with high accuracy, especially for visually distinct classes such as Happy and Sad. Similar-looking expressions such as Fear and Surprise were occasionally confused with each other.
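To check which class pairs account for the errors, such as the Fear/Surprise confusion described above, the off-diagonal cells of the confusion matrix can be ranked. A hedged sketch; `most_confused` is a hypothetical helper, and `cm` is assumed to be a 7×7 NumPy count matrix with true labels on rows:

```python
import numpy as np

EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def most_confused(cm, labels=EMOTIONS, top_k=3):
    """Return up to top_k (true, predicted, count) tuples for the largest errors."""
    off = np.array(cm, copy=True)
    np.fill_diagonal(off, 0)  # ignore correct predictions
    order = np.argsort(off, axis=None)[::-1][:top_k]  # flat indices, largest first
    rows, cols = np.unravel_index(order, off.shape)
    return [
        (labels[r], labels[c], int(off[r, c]))
        for r, c in zip(rows, cols)
        if off[r, c] > 0  # drop empty cells when there are few errors
    ]
```

Counting both directions (Fear predicted as Surprise and Surprise predicted as Fear) shows whether the confusion is symmetric or mostly one-sided.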

Step 3: Approach 2 – Leveraging DeepFace

The DeepFace library ships with a pre-trained emotion model, so no training is required on our side. This approach is faster and easier to implement, making it suitable for scenarios with limited computational resources.

Features of DeepFace:

- Supports emotion detection, age estimation, and gender recognition.

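- Offers several pre-trained face recognition models (such as VGG-Face and FaceNet) and several face detector backends; emotion analysis itself uses a dedicated pre-trained emotion model.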

- Supports real-time analysis of live webcam video through its `stream` function.

Implementation:

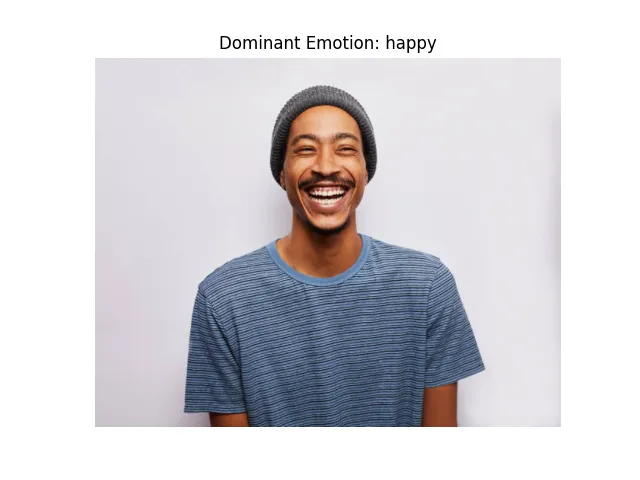

Using the DeepFace API, the system predicted emotions by passing an input image to the library. The results included:

- Emotion Probabilities: A confidence score for each emotion, expressed as a percentage (the seven scores sum to 100).

- Dominant Emotion: The emotion with the highest confidence score.

- Face Region: Coordinates of the detected face.

- Face Confidence: The detector's confidence that the region actually contains a face.
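A minimal sketch of such a call, assuming the standard `DeepFace.analyze` entry point with `actions=["emotion"]` (the project's own code may differ). The helper names are hypothetical. Recent DeepFace versions return a list with one dict per detected face while older ones returned a single dict, so both are normalized to a list. `enforce_detection=False` is an assumed setting that makes DeepFace analyze the whole image instead of raising an error when no face is found. The import sits inside the function so the other helpers work without DeepFace installed.

```python
def analyze_emotions(img_path, detector_backend="opencv"):
    """Run DeepFace emotion analysis on one image file; returns a list of faces."""
    from deepface import DeepFace  # heavy dependency, imported only when needed

    result = DeepFace.analyze(
        img_path=img_path,
        actions=["emotion"],
        detector_backend=detector_backend,
        enforce_detection=False,  # fall back to the whole image if no face is found
    )
    return result if isinstance(result, list) else [result]


def crop_face(image, face):
    """Cut the detected face out of an H x W (x C) array using face['region']."""
    r = face["region"]
    x, y, w, h = r["x"], r["y"], r["w"], r["h"]
    return image[y:y + h, x:x + w]


def top_emotions(face, k=3):
    """Return the k highest-scoring (emotion, percentage) pairs for one face."""
    scores = face["emotion"]
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]
```

For example, `faces = analyze_emotions("photo.jpg")` followed by `top_emotions(faces[0])` lists the strongest emotions for the first face, where `photo.jpg` stands in for any image path.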

Sample Output:

    {
      "emotion": {
        "angry": 0.00,
        "disgust": 0.00,
        "fear": 0.00,
        "happy": 99.99,
        "sad": 0.00,
        "surprise": 0.01,
        "neutral": 0.00
      },
      "dominant_emotion": "happy",
      "region": {"x": 230, "y": 50, "w": 150, "h": 150},
      "face_confidence": 0.91
    }
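DeepFace reports emotion scores as percentages keyed by name, while a Keras softmax head outputs a probability vector in label-index order. To compare the two approaches on the same images, it helps to convert DeepFace's output into the same vector form. A sketch, assuming the custom CNN uses the FER2013 class order below (a hypothetical choice that must match the project's label encoding):

```python
# Assumed class order of the custom CNN's output layer.
CNN_CLASSES = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def deepface_to_probs(face, classes=CNN_CLASSES):
    """Convert DeepFace percentage scores into probabilities in `classes` order."""
    scores = [float(face["emotion"][name]) for name in classes]
    total = sum(scores)
    if total <= 0:
        raise ValueError("emotion scores sum to zero")
    return [s / total for s in scores]  # renormalize so the vector sums to 1


sample = {
    "emotion": {"angry": 0.00, "disgust": 0.00, "fear": 0.00, "happy": 99.99,
                "sad": 0.00, "surprise": 0.01, "neutral": 0.00},
    "dominant_emotion": "happy",
    "face_confidence": 0.91,
}
probs = deepface_to_probs(sample)
predicted = CNN_CLASSES[max(range(len(probs)), key=probs.__getitem__)]
```

Dividing by the sum rather than by 100 keeps the result a valid distribution even if rounding makes the percentages add up to slightly more or less than 100.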

Step 4: Comparison and Insights

Both approaches have distinct advantages:

| Criteria | Custom CNN | DeepFace |
|---|---|---|
| Ease of Use | Requires more effort to build and train | Plug-and-play functionality |
| Customization | Fully customizable | Limited to pre-trained features |
| Performance | High accuracy with a large dataset | Fast predictions with decent accuracy |
| Applications | Scalable for specific use cases | Ideal for general-purpose implementations |
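The performance row above is qualitative. A hedged way to put numbers on it is to run both approaches on the same labeled test images and record accuracy and per-image latency. In this sketch `evaluate` is a hypothetical helper, and `predict` stands for any callable that maps one image to a class label, such as a wrapper around the custom CNN or around DeepFace.

```python
import statistics
import time


def evaluate(predict, images, labels, warmup=2):
    """Return accuracy and median per-image latency (seconds) for one predictor."""
    if len(images) != len(labels) or len(labels) == 0:
        raise ValueError("need equally many images and labels (at least one)")
    for img in images[:warmup]:
        predict(img)  # warm-up calls absorb one-time costs such as model loading
    correct, latencies = 0, []
    for img, label in zip(images, labels):
        start = time.perf_counter()
        pred = predict(img)
        latencies.append(time.perf_counter() - start)
        correct += int(pred == label)
    return {
        "accuracy": correct / len(labels),
        "median_latency_s": statistics.median(latencies),
    }
```

Calling it once per approach on the same held-out set, for example `evaluate(cnn_predict, test_images, test_labels)` with hypothetical names, yields directly comparable figures. The median is used for latency because a few slow calls (garbage collection, disk reads) would skew a mean.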

Applications and Future Potential

The EmotionVision system is versatile and can be applied in:

- Customer Behavior Analysis: Understanding customer emotions in retail or online platforms.

- Security Systems: Identifying suspicious behavior based on emotional cues.

- Gaming Industry: Enhancing player experiences with NPCs that react dynamically to players' expressions.

Future Enhancements:

- Transfer Learning: Fine-tuning larger pre-trained models on facial emotion data for improved accuracy.

- Real-Time Deployment: Integrating with live camera feeds for instant emotion recognition.

- Multimodal Analysis: Combining facial emotions with voice and text sentiment for comprehensive insights.
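Multimodal analysis is listed as future work, so there is no project code for it. A common starting point is late fusion: each modality produces its own emotion distribution, and the distributions are combined with a weighted average. This sketch assumes every modality outputs probabilities over the same seven labels; in practice, text sentiment models often output only positive, negative, or neutral and would need a mapping first. The weights are hypothetical and would be tuned on validation data.

```python
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def late_fusion(modality_probs, weights):
    """Weighted average of per-modality probability vectors over EMOTIONS.

    modality_probs: e.g. {"face": [...], "voice": [...]}, each of length 7.
    weights: per-modality weights; modalities absent from modality_probs are
    skipped and the remaining weights are renormalized to sum to 1.
    """
    used = [m for m in modality_probs if weights.get(m, 0) > 0]
    if not used:
        raise ValueError("no modality with a positive weight")
    total_w = sum(weights[m] for m in used)
    fused = [0.0] * len(EMOTIONS)
    for m in used:
        probs = modality_probs[m]
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{m}: expected {len(EMOTIONS)} scores")
        for i, p in enumerate(probs):
            fused[i] += weights[m] / total_w * p
    best = max(range(len(EMOTIONS)), key=fused.__getitem__)
    return EMOTIONS[best], fused
```

Late fusion keeps each model independent, so the face model can be swapped between the custom CNN and DeepFace without retraining the other modalities.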

Conclusion

EmotionVision demonstrates how AI can decipher human emotions from facial expressions. By comparing a custom CNN with a pre-trained solution like DeepFace, we gained practical insight into building efficient and scalable systems. This project is a stepping stone toward empathetic machines that can understand and respond to human feelings across diverse applications.

For more details, visit my GitHub repository.